Is (generative) AI literacy an oxymoron? Allowing reliance on language models to read and write for us could have a devastating effect on our ability to read and write ourselves.

So. AI literacy.

I’ll be the first to admit that I do not have it… whatever it is.

I’m not a prompt engineer. I don’t know the best words to choose, or the best framework for delegating to a generative algorithm. But is that AI literacy?

Digital Promise suggests that AI literacy is about being able to “understand, evaluate and use” AI systems and tools (reading between the lines, I suspect they are referring specifically to GenAI; we hardly expect everyone to be able to use or evaluate the AI systems powering predictive analytics or AI pattern recognition). Silvano says it’s being able to “interact critically and meaningfully” with them. Those words “evaluate” and “critical” are kinda massive to me, because in context, they imply that an evaluation of AI tools should result in the positive decision to use/interact with those tools (but, you know, carefully).

See, I’ve done my evaluation, and I’ve made the choice not to use GenAI. I think the ethical problems are just too significant.[1] At this point, it feels a bit like being a vegetarian, or being a non-drinker: nobody wants to hear your reasons unless they agree with you, and you won’t change their minds because (a) they immediately get defensive, and (b) they just like it, okay? It tastes good. It feels good. Everyone else is doing it; it’s just easier to live in the world.

But AI use is being framed not as a “lifestyle choice”, but as a “literacy”.

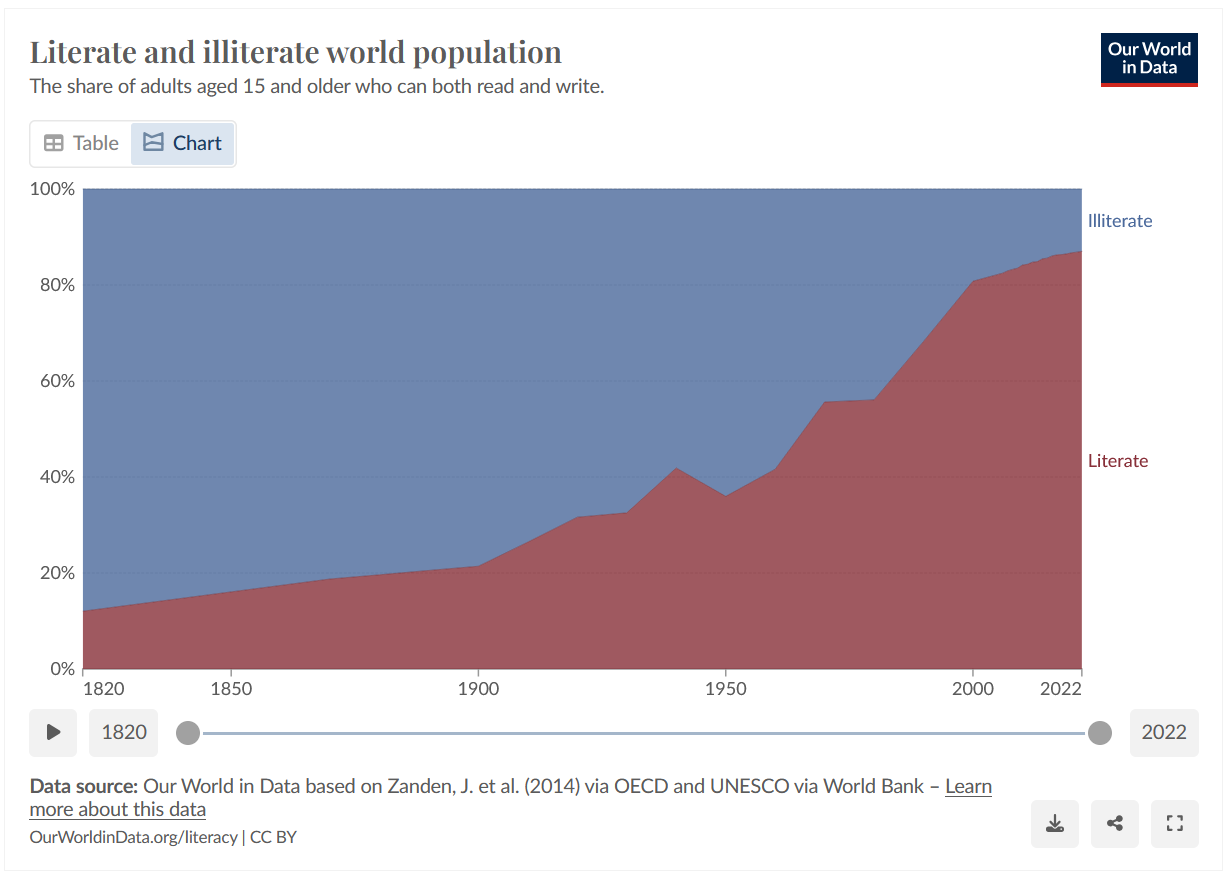

Literacy is a concept that’s been toyed with quite a bit over the past 50 years or so. Literacy just used to mean “being able to read and write”.

It’s now more broadly considered to be an essential social practice that enables a person to participate successfully in society. We now acknowledge the importance of oral and digital communication as components of literacy. We also talk about other types of literacy, like health literacy, financial literacy, information literacy. These aren’t just curriculum areas (nobody’s talking about geography literacy or physics literacy). They’re the essentials. Stuff people need to get on in the world.

So I ask — do we now need to use AI to get on in the world?

What happens if we don’t?

What happens if we do?

Remember that, once, literacy meant being able to read and write. If it becomes a global norm to offload reading or writing to GenAI tools, will literacy itself begin to fall again?

[1] Without making a big noise in the main post, here’s an expansion of those ethical concerns:

Natural resource use by the biggest commercial organisations on the planet, which has reversed their Net Zero strategies and led them to seize more and more land for data centres and to consume more and more water for cooling.

Ongoing intellectual property theft on an unknowably vast scale, processed in a way that makes data origins impossible to identify.

Generation of potentially harmful “content” containing biases, misinformation and “hallucinations” (a misleading word to use about an algorithm that can’t actually see and is therefore only ever hallucinating).

Offloading and diminishing the acts of reading, writing and speaking which are fundamental to human communication, and replacing them with statistically-average, bland “content”.

The already-occurring problem of “model collapse”, in which GenAI models raised and fed on the data generated by previous ones begin to produce, then amplify, errors and distortions (think of cows being fed cow meat and developing mad cow disease, or inbreeding resulting in genetic disorders).

I actually couldn’t care less about the “problem” of students cheating with AI. Students cheating on their assessments is a symptom of their having already been failed by a toxic education culture.
