This is a reflection on what we want, what we need, and the implications of both when we delegate our tasks to technological agents.

Which are primary – needs or wants?

So I’ve been thinking about this… a lot… and I think it’s wants.

Needs are instrumental things: if you need something, you need it for a reason. I need access to clean water so that I can drink it. I need my glasses to see the road. I need my organs because they keep me alive.

But the chain of needs can only end with a want. I need clean water because I need to drink it because I need to keep my body hydrated because I need my organs to keep functioning… because I want to live.

Maybe you don’t want to live. Maybe, actually, you believe you need to live… because. Because you can only protect your children if you are alive. Because you can only reach the top of Mt Everest if you are alive. Because you need to be alive if you’re going to do your life’s work.

And all of those are wants. There have to be wants, or we don’t have any needs.

This is a chain of existential reasoning that drives many depressed people to despair and nihilism. I should know – I am, cyclically, one of them. During my low periods (long stretches every one or two years), I feel as though I don’t want anything at all. And this is not Nirvana, it is a waking nightmare. My “rational” brain, empty of desire, tells me I really don’t have any reason to be here. It’s crippling. I’ve only had one period in my life when I would stay in bed all day because I couldn’t think of a single reason to get out of it – but I deeply understand those who experience this.

I’m not happy I experience this, but I do think it’s valuable knowledge to share, for many reasons.

Here’s just one, and surprise surprise, it’s related to genAI.

AI time

OpenAI’s GPT-3 was initially released in mid-2020. At the time, it was pretty cool to machine learning engineers, but not many others. It took more than two years to reach 1 million users. In late 2022, ChatGPT was released and reached 1 million users in 5 days.

What was so special? Not the technology. That had already existed for a long time, and clearly wasn’t powerful enough to meet many unmet needs. No, it was the user interface that made the difference. With a first-person “voice” and theatrical “typing in progress” animations, ChatGPT felt good to use. People wanted to feel good.

Today, ChatGPT apparently handles about 10 million user queries per day. (The stats are kinda dubious as people just seem to be replicating the same numbers from site to site.) And every day I wake to another debate about what genAI should, shouldn’t, can and can’t be used for.

Now, I’m not a machine learning engineer. I don’t know enough to argue the nuanced case against its performance capabilities. But I know enough about value, creativity and existentialist philosophy to argue a fierce case for keeping a goddamn lot of work in house.

And look, here it is. It all comes down to what we want to do and be in the world.

Let’s break it down.

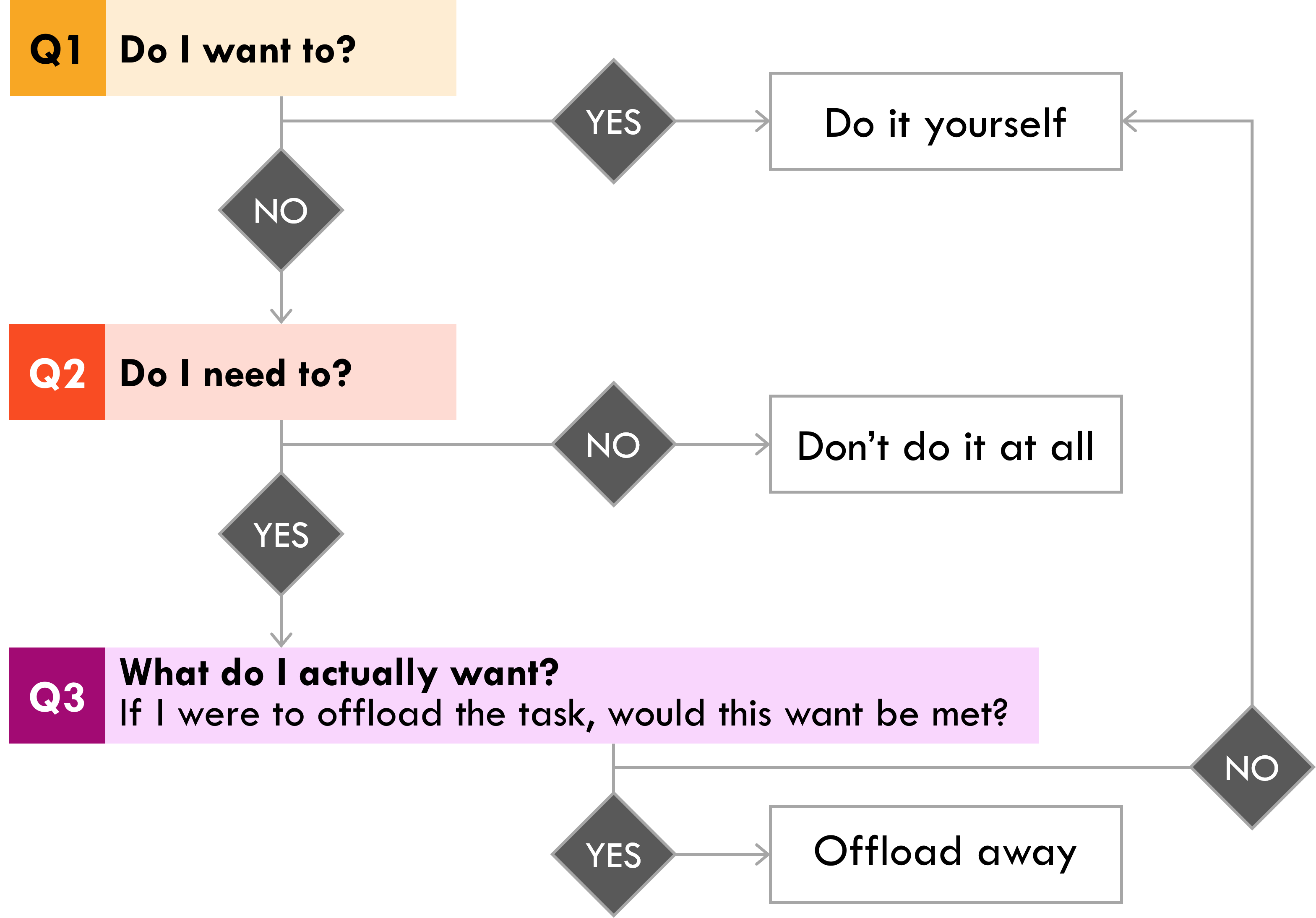

Question 1: Do I want to?

Do I want to spend 2,000 hours a year cataloguing receipts? Do I want to spend 6 hours a day answering my child’s exhausting questions about everything he sees? Do I want to compile that report, answer that email, paint that picture, learn that skill, write that song?

The answer is sometimes yes, sometimes very no. Want is a capricious thing. And if the answer is no, the next question is not “hey Copilot, can you help me out”. It’s:

Question 2: Do I need to?

This is a delicate question. If no, I don’t need to, then hey, by all means, I can just stop. I don’t need to offload to AI or anything else – just stop. But if the answer is yes, I have to be careful not to conclude “well, if I don’t want to but I need to, I should be able to outsource it”. Instead, I ask:

Question 3: What do I actually want?

And will this want be met if I get it done a different way?

Because maybe I don’t want to do it, but I do want something else that comes from doing it. I really don’t want to be my kid’s¹ encyclopedia – it’s tiring, tedious, and kind of embarrassing when I have to say “I don’t actually know”.

But I feel I shouldn’t be sending him off to look everything up on Google because the internet is full of weird stuff and I want him to find good answers, not horrific 4chan garbage; and if I’m honest with myself, I know he’s asking me because he respects me and he wants my attention. I do actually love him and want to give him attention when I can, because one day he’ll be 15 and find me so uncool.

If it was just the relationship reason, I could probably offload to Google sometimes but not always. The need would be met, and my real want (a relationship with my kid) would also be met. But I’m still not so comfortable about what he’s going to find on Google, so I need a solution that’s going to meet my other want (for my kid to get good answers to his questions).

This is where use cases come from. And I guess what I’m finding really frightening is that our actual wants are getting drowned out in the most senseless way by a barrage of messaging telling us we need to adopt genAI to keep up, to mitigate the risks of being left behind, to stay competitive, to be efficient, to ensure our businesses are successful.

Instead, why aren’t we asking ourselves whether the wants implicit in these “needs” are really our wants at all – and even if they are, whether using genAI is the best way to meet them?

As the swift, embarrassing failure of Google AI Search this week has shown us, genAI is not proving a particularly good way to meet our supposed wants. I’d be far, far less likely to have my imaginary son using Google AI for answers than the standard search tool.

It’s a moment that has exposed that many AI use cases are not meeting our needs or wants – so whose are they attempting to meet? It seems fairly clear that the main need being serviced was Google’s need to appear active, relevant and productive in the age of AI. The urgency surrounding this need led the company to release a tool displaying serious performance flaws and transparently unethical development practices. The developers were in too much of a hurry to train or test the algorithm properly, and the stakes were very, very high.

Of course, using our little framework above, let’s turn that final Question 3 back on Google: what does Google² really want out of this?

¹ To be clear, this kid is hypothetical. I have a cat and he really doesn’t care.

² Lest anyone think I’m picking on Google, this question could equally apply to Microsoft’s Copilot+ Recall team or the idiot at OpenAI who decided to steal Scarlett Johansson’s voice last week.
